Where I've been: Building a business, serving clients, helping leaders navigate AI strategically rather than reactively. Doing the work while the cultural conversation swirled.

Why I'm back: The headlines are shrieking about AI job displacement, and maybe you’re thinking about applying for a janitorial position at Skynet HQ. Before we all start panic-enrolling in Prompt Engineering for Dummies, let's examine what's really happening beneath the surface.

This new post is long because it needs to be. Especially over the last few weeks, people have been bombarded with puffery and confusing information. AI tools are incredibly useful. If you’re not using them, you should consider at least learning more about them. But the field is crowded with marketing hype. So let’s cut through the noise.

TL;DR: With each passing week, you’re told that the AI singularity is near and your job is at risk. AI doomers like Anthropic's Dario Amodei predict mass job displacement because they make two fatal errors: (1) they view the world in purely material terms and treat consciousness as mere computation, so they assume machines can replicate human minds, and (2) they treat the economy like a static machine instead of a living system that adapts to technological change. The first mistake stems from a materialist philosophy that reduces humans to "meat computers." The second stems from static “central planner” thinking that misunderstands how dynamic economies actually work.

The Materialist's Error

Dario Amodei has ties to the Effective Altruism (EA) movement, which seems to frame much of his public thinking. His warnings echo a worldview shaped by EA's utilitarian calculus. It’s the same philosophy that gave us the con man Sam Bankman-Fried. Effective Altruists have seriously proposed prioritizing asteroid defense over current poverty relief, and redirecting resources to ensure the survival of hypothetical future civilizations over helping real people today.

The materialist strain running through EA sees humans as biological computers: organic processors running wetware code, where thoughts are calculations and consciousness is complexity's side effect. It's a reduction that makes uploading your soul sound like a software update.

Amodei is a little more careful than many who share his views, and he peppers his AI doom prophecy with some amount of optimism. Yet his worldview reveals materialist assumptions (the idea that our reality consists only of matter and energy, and nothing else). Amodei describes AI as "smarter" than Nobel Prize winners (not more specialized or faster, but smarter), smuggling in language that suggests sentience.

Remember, whenever you read or hear this sort of thing confidently asserted, that the “GPT” in ChatGPT stands for Generative Pre-trained Transformer. ChatGPT is trained on human information. It transforms that information into math. That’s extremely cool. Yet Large Language Models (LLMs) like ChatGPT do not understand the human information they have been fed.

Fittingly, Apple just released a study concluding that current AI models present the “illusion of thinking,” a pitch-perfect riposte that happens to line up with the timing of this very post you’re reading. Past a certain threshold, Apple reveals, AI models “face a complete accuracy collapse [emphasis mine] beyond certain complexities.” (If you’ve used AI for any length of time, you’ve probably encountered this collapse repeatedly.)

And here is another key to understanding why the materialist worldview gets things wrong.

As an example, consider the pending “robopocalypse” of domestic humanoid robots doing household chores. Here’s the tell: When AI programming "inhabits" a robot, materialist technologists treat this as the same kind of embodiment as a human. “Human-like artificial intelligence needs a human-like artificial body,” trumpet the marketers behind the humanoid robot “Ameca.” Movies like Ex Machina present this as a fait accompli.

But controlling a mechanical gadget isn't the same thing as being an integral human being with a body. This kind of thinking confuses a computer interface with the interior life of a flesh-and-blood human being.

Technologists love to push the “magical” quality of new innovations, because as sci-fi author Arthur C. Clarke noted, “Any sufficiently advanced technology is indistinguishable from magic.” For many years, most of us were enamored of our “smartphones.” Recall that Steve Jobs called it a “magical product.” Now comes the backlash.

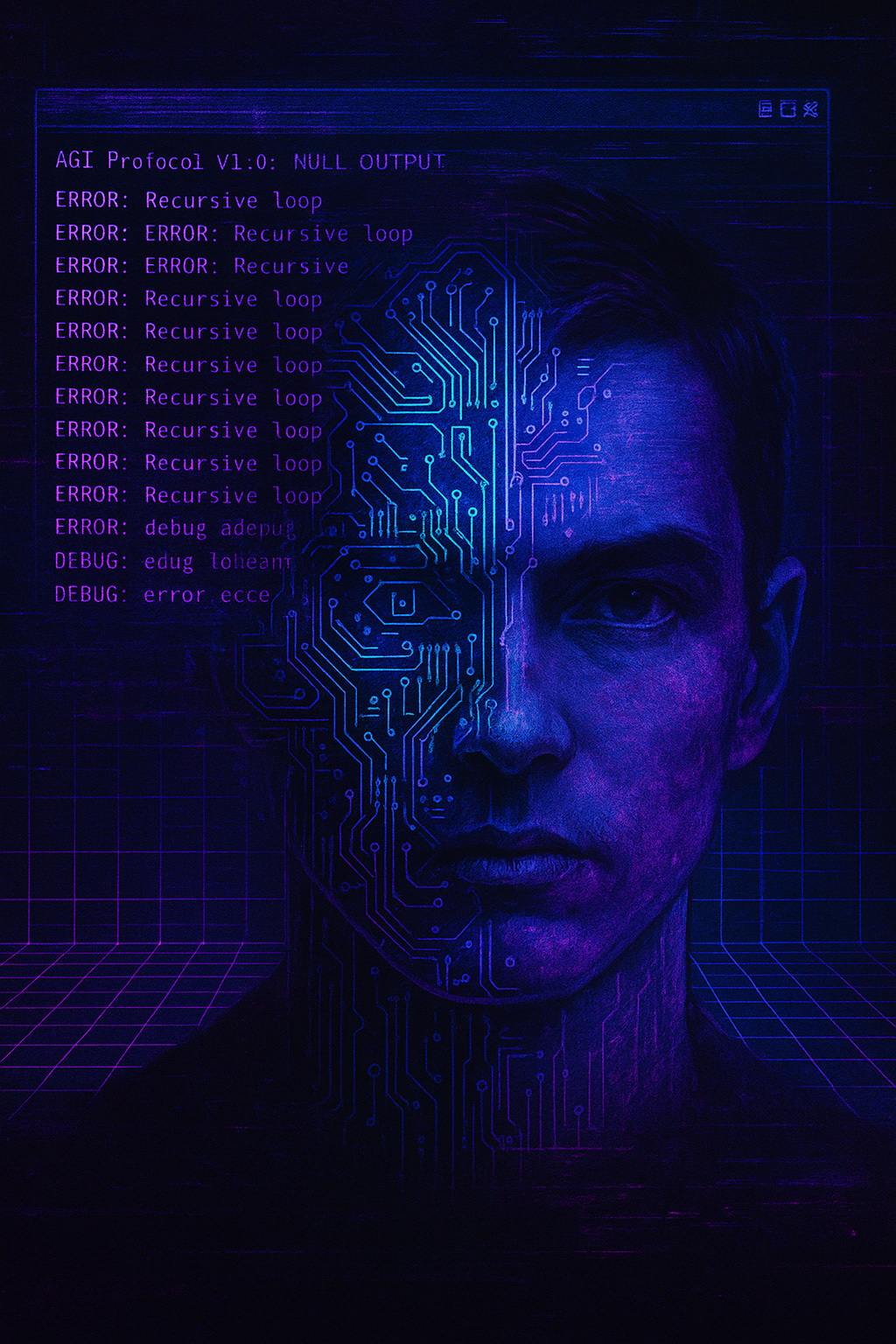

In the case of AI, these same awed pronouncements gloss over huge problems such as the feedback loops and brittleness of large AI models: their tendency to create slop that feeds future slop, amplifying error as they eat their own tail. The idea that these systems can “self-improve” avoids the issue of data degradation that compounds as models ingest other models' outputs.

If you've used AI extensively, you've probably experienced that "Flowers for Algernon" moment (dazzling one second, Windows 95 desktop the next). Without real cognition or intention, self-referential training creates entropy. Coherence collapses under auto-generated noise.

The materialist crew embedded in Silicon Valley aren't prophets. They confuse imitation with incarnation and mistake simulation for soul. The idea that you can "upload a mind" or recreate sentience with bits and bytes reflects metaphysical blindness: reducing consciousness to three pounds of fat and water floating in your skull.

Human beings aren't meat puppets. We are not machines. Consciousness isn't a feature to toggle. You are not your brain.

The Soul the Simulacra Forgot

Amodei and his ilk never seem to acknowledge anything beyond the computational: not neuroplasticity's mysterious adaptability, not immaterial consciousness, not the ubiquitous human experience of the numinous.

Realities that aren't measurable, programmable, or reducible.

Neuroplasticity reveals brains as adaptive systems that rewire in response to experience and intention. This suggests that consciousness isn’t reducible to brain chemistry. When thoughts, choices, and attention reshape neural circuits, it implies that a non-material agent, something directive and personal beyond mere biology, exerts causal power on the brain.

The EA/materialist framework has no room for mystery—no spiritual dimension, no metaphysical depth. Materialism assumes all human experience (emotion, thought, memory, moral conscience) reduces to physical processes:

You are your brain

Your brain is neurons firing

Neurons are atoms obeying physical law

Therefore, sufficiently complex machines could replicate you

This is a category error. Saying “thoughts are made of neurons” is like saying “a poem is made of ink.”

Consciousness is awareness, thinking about thinking, an interior reality that cannot be measured or simulated, but only experienced. As Thomas Nagel asked, "What is it like to be a bat?" The question illustrates that subjective consciousness cannot be reduced to data points. This is why exponential computing power will never "wake up." Machines may compute, simulate, and replicate, all without experiencing awareness. They cannot suffer, love, or know what it's like to be anything. Consciousness isn't math's end-product; it's the irreducible mystery that makes experience possible.

The bizarre transhumanist dream of uploading minds into computer clouds isn't science—it's a new myth in which soul becomes software, heaven becomes server farms, and salvation becomes successful upload. This isn't just bad philosophy; it's bad theology slamming into bad science fiction and rebranded as futurism.

The odds of technology that really “uploads” human minds—preserving your actual consciousness, not just copying your behavior—are near zero this century and vanishingly small even in the next. Probably next to zero after that. Meanwhile, headlines about scientists experimenting with ethically dubious “organoids” grown from fetal cells, which are said to “mimic” mini-brains, often leave out one glaring detail: they do not have consciousness.

The AGI (Artificial General Intelligence) hype machine depends on similarly mistaken thinking mixed with hype and clever evasion. It depends on a Western world steeped in materialist fables. Everyone freaks out after the latest doomer pronouncement not just because predictions are persuasive, but because materialist premises have taken root. We've been catechized into science-cloaked gospel where souls are optional.

The AGI Mirage

Those worried about or hopeful for AGI envision AI systems learning and reasoning across domains with human-like adaptability. Not just smarter chatbots, but cognitive engines capable of abstract reasoning and autonomous decision-making.

We're supposed to be awestruck by “agents” (AI bots performing tasks on our behalf). These are impressive and useful. But they're logical extensions of software evolution. They’re not magic.

No one has demonstrated even the beginnings of true general intelligence in machines. We're seeing powerful mimicry, not understanding. Famously, the Turing Test asked if a machine could hold a conversation so convincing that a human couldn’t tell it wasn’t human. For decades, this was seen as the holy grail of artificial intelligence, the moment a machine might prove it could “think.” The test turned out to be too easy by half. Passing it doesn’t prove intelligence. It just proves we’re easy to trick.

While both AI doomers and AI optimists overestimate AI's capabilities, the doomers are the most prominent right now because fear draws eyeballs. The AGI hype persists because it sells: it draws funding, headlines, influence. The apocalyptic narrative rests on several flawed assumptions that its promoters might hope we won’t notice:

Intelligence doesn’t depend on what it’s made of (doesn't matter if it's silicon or carbon).

Wrong because: This assumption ignores the embodiment, context, and continuity that make consciousness more than computation.

Consciousness emerges from complexity: stack enough layers and “neurons” and voilà, a soul appears.

Wrong because: Complexity alone doesn’t produce consciousness. A more complicated machine is still a machine.

Moral agency can be programmed. Machines can act ethically with no inner life.

Wrong because: Simulation isn't understanding. Ethics demands conscience. Conscience can't be coded.

The Myth of a Frozen Economy

Amodei's second mistake is equally dubious. He views our economy as a static machine, as if current job structures are frozen in amber. But free market economies are ecosystems in constant flux. To predict a "job apocalypse" assumes that today's jobs are permanent categories rather than evolving with technology, culture, and needs.

People in their 50s today (people like me) have lived through the rise and fall of entire industries. We've adapted. Reinvented. Pivoted. That's what free people in dynamic markets do:

The printing press may have “destroyed” the ancient need for scribes. It also gave rise to publishing, journalism, copywriting, mass education, and marketing

The combustion engine didn't just replace horses; it spurred more oil refineries, automotive repair, road construction, and even global tourism

The internet didn't merely kill newspapers; it spawned digital marketing, content creation, ecommerce, UX design, cybersecurity... and AI

For every job AI automates, it will catalyze ten more in fields that barely have names yet. AI alignment, digital ethics, hybrid design, human oversight, and trust infrastructure are a few early examples.

Amodei warns of mass displacement because he thinks like a central planner—as if history ends here. But the real world doesn't stand still. It metabolizes shocks, adapts, and reinvents. It always has.

What’s Next

We've spent thirty years circling the drain of the old industrial economy, mixed with a “knowledge economy” we built by exporting our manufacturing base abroad. We wanted flying cars, but instead we got 140 characters, as Peter Thiel quipped. That was probably less about failed imagination than it was the slow entropy of the postwar model.

Now we're finally on the edge of something else. This isn't doomsday. It’s the end of one era and another's beginning.

If AI really is the next general-purpose technology, we should expect upheaval. We are likely to witness some businesses overreaching with a toxic mix of haphazard job cuts, some amount of ageism, and a reliance on systems that still hallucinate fictional data. Businesses that rely too much on still-nascent large language models will stumble. Or fall. And a severe correction will be due for leadership foolhardy enough to believe the AI hype without actually learning to deploy new tools effectively.

Yet we should also expect expansion in ways culty EA tech founders cannot compute. The AGI hype cycle thrives on fear dressed as foresight, but no one knows what work's future looks like—not even its most confident engineers. What we know is that humans aren't reducible to patterns in silicon. We are more than processors. We are creators, adapters, and reinventors.

AI is powerful. I use it daily: accelerating research, drafting documents, saving hours from tedious admin work. It's a tool, not destiny. It amplifies and accelerates, but doesn't replace, the human impulse to ask, wonder, create.

The real risk isn't that AI replaces us. The real risk is forgetting what we are and believing the materialist lie that we're nothing more than “wetware” biological machines. Once we accept that premise, we're close to calling ourselves what materialists assume we are: space zombies from planet Earth, performing tasks until our batteries run out. Consciousness isn't code. Jobs aren't fixed categories.

And humans aren't just upgraded hardware waiting for the next patch.

P.S. This essay was co-written with AI help. If that doesn't undercut the AI doomer narrative for you, it should. AI doesn't care if arguments hold together. It doesn't believe anything. That's my job.